Last Updated on October 17, 2024 by Content Team

Web scrapers are tools designed to extract data from a website via a crawling engine, usually written in Java, Python, Ruby or another programming language.

Web scrapers are also called web data extractors, data harvesters, and crawlers. Most are either web-based or installed on a local desktop.

Web scraping software enables webmasters, bloggers, journalists and virtual assistants to harvest data from a website, whether text, numbers, contact details or images, in a structured way. Manual copying and pasting is impractical when a large amount of data needs to be scraped. Typically, the software transforms unstructured data on the web, in HTML format, into structured data stored in a local database or spreadsheet.
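To make the HTML-to-spreadsheet idea concrete, here is a minimal sketch using only the Python standard library. It reads an HTML table and writes it out as CSV, which any spreadsheet can open. The sample markup, the class name `TableRows` and the function name `html_table_to_csv` are made up for illustration; real pages are messier and usually need a more forgiving parser.

```python
import csv
import io
from html.parser import HTMLParser


class TableRows(HTMLParser):
    """Collect the text of every <td>/<th> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []      # finished rows
        self._row = None    # cells of the row currently being read
        self._cell = None   # text fragments of the cell currently being read

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        # Only keep text that appears inside a table cell.
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


def html_table_to_csv(html):
    """Return the table rows found in `html` as CSV text."""
    parser = TableRows()
    parser.feed(html)
    parser.close()
    out = io.StringIO()
    csv.writer(out, lineterminator="\n").writerows(parser.rows)
    return out.getvalue()


# Illustrative sample page, not taken from any real site.
SAMPLE_HTML = """
<table>
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Widget</td><td>9.99</td></tr>
  <tr><td>Gadget</td><td>24.50</td></tr>
</table>
"""

print(html_table_to_csv(SAMPLE_HTML))
```

The commercial tools in this list do the same job at a much larger scale, and add scheduling, proxies and JavaScript rendering on top.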

Web Scraper Usage in the Real World

Online marketers rely on web scraping to gain a competitive edge by extracting crucial data from competitors’ websites, including high-converting keywords, valuable backlinks, emails, and traffic sources. This data enables them to optimize strategies and outpace the competition. For example, an e-commerce site might use web scraping to monitor competitors’ pricing in real-time, allowing them to adjust prices dynamically and stay competitive.

Beyond marketing, web scraping serves a wide range of industries. Retailers such as Amazon or Walmart use scrapers for price comparison across platforms to ensure they remain competitive. Financial analysts scrape and monitor weather data to inform investment decisions in the agriculture and energy sectors. Publishers use scrapers for website change detection, such as keeping track of regulatory updates or stock news.
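Website change detection, one of the uses mentioned above, can be as simple as comparing a fingerprint of the page text between visits. The sketch below assumes you already have the page text as a string; the function names and the sample sentences are hypothetical.

```python
import hashlib


def fingerprint(page_text):
    """Return a SHA-256 digest of the page text, ignoring whitespace differences."""
    normalized = " ".join(page_text.split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def has_changed(previous_fingerprint, page_text):
    """True when the page text no longer matches the stored fingerprint."""
    return fingerprint(page_text) != previous_fingerprint


# Fingerprint stored from an earlier visit (sample text, for illustration).
old = fingerprint("Rule 12: filings are due by 1 March.")

# Same wording with different spacing: not reported as a change.
print(has_changed(old, "Rule 12:  filings are due by 1 March.\n"))
# Different wording: reported as a change.
print(has_changed(old, "Rule 12: filings are due by 15 March."))
```

Normalizing whitespace first avoids false alarms when a site only re-indents its markup.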

Researchers, such as those at academic institutions, use web scraping to gather large datasets for market research or for sentiment analysis on social media platforms. In the tech world, web mashups combine data from various sources into a unified dashboard, such as displaying live flight data alongside traffic updates.

Additionally, businesses frequently use scrapers to create infographics (great for link building) by compiling data from diverse sources into visually engaging formats, and for web data integration, pulling structured data into internal systems to improve decision-making.

List of Best Web Scraping Software 2024

Hundreds of web scrapers are available today for commercial and personal use, from simple browser extensions to full-on cloud-based scraping tools. Since my initial post about the best proxies to buy, many of these proxy providers now also offer web scraping options.

If you are looking for a quick Chrome extension, try one of these:

Web Scraper: A Chrome extension that allows you to extract data from websites easily. You can use its visual point-and-click interface or write custom XPath selectors to scrape data.

Data Miner: Another Chrome extension that lets you extract data from websites. It supports pagination, JavaScript execution, and various other advanced features.

Scraper: A simple Chrome extension for scraping data from web pages. It allows you to scrape text, links, and images, and supports custom XPath selectors.
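If you have not written an XPath selector before, the sketch below shows the idea in Python. It uses the standard library's `xml.etree.ElementTree`, which supports only a limited subset of XPath and needs well-formed markup, so treat it as an illustration of the selector syntax and not as how these extensions work internally. The sample markup and the function name `scrape_items` are made up.

```python
import xml.etree.ElementTree as ET

# Illustrative, well-formed sample page (real pages often are not well-formed).
PAGE = """
<html><body>
  <ul id="products">
    <li class="item"><a href="/widget">Widget</a><span class="price">9.99</span></li>
    <li class="item"><a href="/gadget">Gadget</a><span class="price">24.50</span></li>
  </ul>
</body></html>
"""


def scrape_items(xhtml):
    """Return name, URL and price for every <li class="item"> in the page."""
    root = ET.fromstring(xhtml.strip())
    items = []
    # ".//li[@class='item']" means: any <li> at any depth whose class is "item".
    for li in root.findall(".//li[@class='item']"):
        link = li.find("a")
        price = li.find("span[@class='price']")
        items.append({"name": link.text, "url": link.get("href"), "price": price.text})
    return items


for item in scrape_items(PAGE):
    print(item)
```

In the browser extensions you type the same kind of selector into a form field and the extension runs it against the live page for you.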

If you are looking for something a bit more substantial, check out these tools:

Contents

- 1 ScraperAPI

- 2 ScrapingBee

- 3 Octoparse Web Scraper

- 4 Helium Scraper

- 5 Import.io

- 6 Content Grabber

- 7 HarvestMan

- 8 Scraperwiki [Commercial]

- 9 FiveFilters.org [Commercial]

- 10 Kimono [Update – now discontinued]

- 11 Mozenda [Commercial]

- 12 80Legs [Commercial]

- 13 ScrapeBox [Commercial]

- 14 Scrape.it [Commercial]

- 15 Scrapy [Free Open Source]

- 16 Needlebase [Commercial]

- 17 OutwitHub [Free]

- 18 irobotsoft [Free]

- 19 iMacros [Free]

- 20 InfoExtractor [Commercial]

- 21 Google Web Scraper [Free]

- 22 Webhose.io (freemium)

- 23 Apify

- 24 Expired Domain Name Web Scrapers

ScraperAPI

Are you looking for a web scraping tool that handles browsers, proxies, and CAPTCHAs? Scraper API is a strong option: it lets developers get raw HTML from websites with a simple API call.
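A "simple API call" to a scraping service usually means requesting the service's endpoint with your key and the target page as query parameters. The sketch below only builds such a URL. The endpoint `scraping-api.example.com` and the parameter names `api_key`, `url` and `render` are placeholders I have assumed for illustration, so check the provider's documentation for the real ones.

```python
from urllib.parse import urlencode

# Hypothetical endpoint and parameter names, for illustration only.
API_ENDPOINT = "https://scraping-api.example.com/"


def build_request_url(api_key, target_url, render_js=False):
    """Return the URL to request so the service fetches `target_url` for you."""
    params = {"api_key": api_key, "url": target_url}
    if render_js:
        params["render"] = "true"   # assumed flag for JavaScript rendering
    # urlencode percent-encodes the target URL so its own "?" and "&" survive.
    return API_ENDPOINT + "?" + urlencode(params)


request_url = build_request_url("YOUR_API_KEY", "https://example.com/products?page=2")
print(request_url)
```

Fetching that URL with any HTTP client would then return the raw HTML of the target page, with the proxy and CAPTCHA handling done on the service's side.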

ScraperAPI also has a no-code platform with dedicated scrapers for endpoints like Google search results.

Scraper API also manages its own internal pool of over a hundred thousand residential and datacenter proxies, sourced from different proxy providers. Its smart routing logic sends requests through different subnets and throttles requests to avoid IP bans and CAPTCHAs.

How much does Scraper API cost? Scraper API has three paid plans: Hobby costs $29.00 per month, Startup costs $99.00 per month, and Business costs $249.00 per month. Scraper API also offers a free plan that comes with unlimited features.

Features of Scraper API

Some of the fantastic features of Scraper API include:

• 20+ Million IPs: Scraper API rents more than 20 million IP addresses from different service providers located in various parts of the world. It also uses a mixture of residential, mobile and datacenter proxies, which helps to increase reliability and avoid IP blocks.

• 12+ Geolocations: This tool offers geo-targeting in about 12 countries, which helps developers get localized and accurate information worldwide without renting multiple proxy pools.

• Easy Automation: One beautiful thing about Scraper API is that it puts developers first by handling lots of complexities: it automates IP rotation, CAPTCHA solving, and JavaScript rendering with a headless browser, so that developers can scrape any page they need with a simple API call.

• 99.9% Uptime Guarantee: This web tool understands the importance of data collection to all businesses, which is why it offers high reliability and a 99.9% uptime guarantee to both small and large-scale customers.

• Professional Support: Scraperapi also provides fast and friendly customer support 24 hours daily.

• Fully Customizable: Scraper API is a very easy tool to start with. Features like request type, request headers, IP geolocation and more can also be customized to suit your business needs.

• Fast and Reliable: Scraper API is fast and reliable, with speeds of up to 100 Mb/s, which makes it great for writing speedy web crawlers and building scalable web scrapers.

• Never Get Blocked: One of the most frustrating parts of web scraping is dealing with CAPTCHAs and IP blocks. Scraper API rotates IP addresses from its pools on every request and automatically retries failed requests, which greatly reduces the chance of being blocked.

• Unlimited Bandwidth: Scraper API does not charge for bandwidth, only for successful requests, which makes it easier for customers to estimate usage and keep costs down on large-scale web scraping jobs.
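The rotate-and-retry behaviour described in the feature list can be sketched in a few lines. This is not Scraper API's actual implementation, only an illustration of the general technique. The proxy addresses, the `FetchError` exception and the `fake_fetch` stand-in are all hypothetical, and the stand-in replaces a real HTTP call so the example runs without network access.

```python
import itertools


class FetchError(Exception):
    """Raised by a fetch function when a request is blocked or fails."""


def fetch_with_rotation(url, proxies, fetch, max_attempts=3):
    """Try `url` through successive proxies until one attempt succeeds."""
    rotation = itertools.cycle(proxies)
    last_error = None
    for _ in range(max_attempts):
        proxy = next(rotation)
        try:
            return fetch(url, proxy)
        except FetchError as error:
            last_error = error   # blocked or failed: move on to the next proxy
    raise FetchError(f"all {max_attempts} attempts failed") from last_error


def fake_fetch(url, proxy):
    """Stand-in for a real HTTP call: the first proxy is 'blocked'."""
    if proxy == "proxy-a.example:8080":
        raise FetchError("blocked")
    return f"<html>fetched {url} via {proxy}</html>"


html = fetch_with_rotation(
    "https://example.com/",
    ["proxy-a.example:8080", "proxy-b.example:8080"],
    fake_fetch,
)
print(html)
```

A hosted service hides this loop behind its API, which is why you are billed per successful request and not per attempt.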

Finally, Scraper API is an excellent web scraping tool for freelancers and small and medium-sized business owners. It has an amazing pool of proxies that makes it easy for developers to crawl e-commerce listings, reviews, social media sites, real estate listings and much more.

ScrapingBee

ScrapingBee is an API for web scraping.

The web is becoming increasingly difficult to scrape. There are more and more websites using single page application frameworks like Vue.js / Angular.js / React.js and you need to use headless browsers to extract data from those websites.

Using headless Chrome on your local computer is easy. But scaling to dozens of Chrome instances in production is a difficult task. There are many problems: you need powerful servers with plenty of RAM, and you’ll run into random crashes, zombie processes and more.

The other big problem is IP-rate limits. Lots of websites are using both IP rate limits and browser fingerprinting techniques. Meaning you’ll need a huge proxy pool, rotate user agents and all kinds of things to bypass those protections.

ScrapingBee can also be used to submit forms.

ScrapingBee solves all these problems with a simple API call.

Features

Large proxy pool, datacenter and residential proxies

Javascript rendering with headless Chrome

Ready-made APIs to scrape popular websites like Google and Instagram

IP geolocation

Execute any Javascript snippet

Top-notch support. At ScrapingBee we treat customers as real human beings, not ticket numbers.

Price

$29/mo for 250 000 credits

$99/mo for 1 000 000 credits

$199/mo for 2 500 000 credits
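To compare the three tiers, it helps to work out the price per 1,000 credits. The short calculation below uses only the figures listed above. How many credits a single request consumes is not stated here, so this compares credits and not requests.

```python
# (monthly price in USD, credits included), taken from the price list above.
PLANS = [
    (29, 250_000),
    (99, 1_000_000),
    (199, 2_500_000),
]


def cost_per_thousand(price_usd, credits):
    """Price in USD of 1,000 credits on a given plan."""
    return price_usd / credits * 1000


for price, credits in PLANS:
    print(f"${price}/mo: ${cost_per_thousand(price, credits):.3f} per 1,000 credits")
```

The unit price falls from about $0.116 to about $0.080 per 1,000 credits as you move up the tiers.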

ScrapingBee is a really good choice for freelancers, small and medium businesses that don’t want to handle the web scraping infrastructure themselves.

It can greatly reduce the cost of renting the proxies yourself and/or deploying and managing headless browsers in the cloud.

Octoparse Web Scraper

Octoparse is a powerful yet free web scraper with a wealth of comprehensive features. The free tool places no limit on the number of pages you can scrape in a day. It also simulates the way a human browses, which makes the whole scraping process easy and smooth to operate. Even if you have no clue about programming, you can still use this tool.

Its point-and-click interface lets you easily choose the fields you want to scrape from a website. It can handle both static and dynamic websites that use JavaScript, AJAX, cookies and more. The application also provides a cloud-based platform that allows you to extract large amounts of data.

Once you scrape the data, you can export it in TXT, CSV, XLSX or HTML format, depending on how you want to organize your data. The free version of the app allows you to build up to 10 crawlers; with a paid subscription plan, you get more features, such as an API and anonymous IP proxies that help you speed up the extraction process and fetch large volumes of data in real time.

Features

• Has an Ad blocking technique that helps you extract data from Ad-heavy pages and sites.

• The tool offers support to mimic a human user while visiting and scraping data from specific websites.

• Octoparse allows you to run an extraction on the cloud and your local machine all at the same time.

• Allows you to export all types of data.

Pros:

• The tool has an off-the-shelf guideline as well as YouTube tutorials that you can use to learn how to use the tool.

• Has built-in task templates, with at least 10 crawlers.

Cons:

• The tool can export data in several file formats, but not PDF, which is a drawback.

Helium Scraper

Helium Scraper is an all-in-one Windows application that combines a point-and-click editor and a set of off-screen browsers where extractions run. An advantage of this approach is that extractions run locally and data gets directly stored in the local machine. This also implies that monthly payments are not required, and there’s no limit on the amount of data that can be captured.

Agents can be created by selecting sample elements on the built-in browser to produce selectors. Unlike other web scrapers, these selectors don’t just use CSS or XPath, but a robust algorithm that identifies elements even when their similarity is small. These selectors can then be used in any of the predefined commands, which can be added, one after another, to perform specific actions, such as clicking elements, selecting menu items, turning pages, extracting data, and much more.

On the other hand, complex actions can be defined by combining existing ones, using variables, and incorporating JavaScript code. This makes Helium Scraper a very versatile web scraper, that can be easily configured to extract from simple web sites, but can also be adjusted to handle more complex scenarios.

Import.io

Import.io has a great set of web scraping tools that cover all different levels. If you’re short on time, you can try their Magic tool, which will convert a website into a table with no training. For more complex websites, you’ll need to download their desktop app, which has an ever-increasing range of features, including web crawling, website interactions and secure log ins.

Once you’ve built your API, they offer several simple integration options such as Google Sheets, Plot.ly, and Excel, as well as GET and POST requests. When you consider that all this comes with a free-for-life price tag and an awesome support team, import.io is a clear first port of call for those on the hunt for structured data. They also offer a paid enterprise-level option for companies looking for more large-scale or complex data extraction.

Content Grabber

Content Grabber is an enterprise-level web scraping tool. It is extremely easy to use, scalable and incredibly powerful. It has all the features you find in the best tools, plus much more. It is the next evolution in web scraping technology.

Content Grabber can handle the difficult sites that other tools fail to extract data from. It includes web crawler functionality, integration with Google Docs, Google Sheets and Dropbox, and the ability to extract data to almost any database, including directly to custom data structures.

The visual editor has a simple point & click interface. It automatically detects and configures the required commands, facilitating decreased development effort and improved agent quality. Centralized management tools are included for scheduling, database connections, proxies, notifications and script libraries. The dedicated web API makes it easy to run agents and process extracted data on any website.

There’s also a sophisticated API for integration with third-party software. It enables you to produce stand-alone web scraping agents, which you can market and sell as your own, royalty-free. Content Grabber is the only web scraping software that scraping.pro gives 5 out of 5 stars in its Web Scraper Test Drive evaluations. You can own Content Grabber outright or take out a monthly subscription.

HarvestMan

[Free Open Source]

HarvestMan is a web crawler application written in the Python programming language. HarvestMan can be used to download files from websites according to a number of user-specified rules. The latest version of HarvestMan supports more than 60 customization options.

HarvestMan is a console (command-line) application. HarvestMan is the only open source, multithreaded web-crawler program written in the Python language. HarvestMan is released under the GNU General Public License. Like Scrapy, HarvestMan is truly flexible; however, your first installation will not be easy.

Scraperwiki [Commercial]

Using minimal programming, you will be able to extract anything. Of course, you can also request a private scraper if you want to protect an exclusive. In other words, it’s a marketplace for data scraping.

Scraperwiki is a site that encourages programmers, journalists, and anyone else to take online information and turn it into legitimate datasets. It’s a great resource for learning how to do your own “real” scrapes using Ruby, Python or PHP. But it’s also a good way to cheat the system. You can search the existing scrapes to see if your target website has already been done. But there’s another cool feature where you can request new scrapers be built. All in all, a fantastic tool for learning more about scraping and getting the desired results while sharpening your skills.

Best use: Request help with a scrape, or find a similar scrape to adapt for your purposes.

FiveFilters.org [Commercial]

FiveFilters.org is an online web scraper available for commercial use. It provides easy content extraction through its Full-Text RSS tool, which can identify and extract web content (news articles, blog posts, Wikipedia entries, and more) and return it in an easy-to-parse format. Advantages: speedy article extraction, multi-page support, autodetection, and deployment on a cloud server with no database required.

Kimono [Update – now discontinued]

Produced by Kimono Labs, this tool lets you convert data into APIs for automated export. Benjamin Spiegel did a great YouMoz post on how to build a custom ranking tool with Kimono, well worth checking out!

Mozenda [Commercial]

This is a unique tool for web data extraction or web scraping, designed to be the easiest and fastest way for anyone to get data from the web. It has a point & click interface, and with the power of the cloud you can scrape, store, and manage your data, all with Mozenda’s incredible back-end hardware. For more advanced use, you can automate your data extraction without leaving a trace, using Mozenda’s anonymous proxy feature, which can rotate through large numbers of IPs.

Need that data on a schedule? Every day? Each hour? Mozenda takes the hassle out of automating and publishing extracted data. Tell Mozenda what data you want once, and then get it however frequently you need it. Plus, it allows advanced programming through a REST API, which lets the user connect directly to their Mozenda account.

Mozenda’s Data Mining Software is packed full of useful applications, especially for salespeople. You can do things like lead generation, forecasting, acquiring information for establishing budgets, and competitor pricing analysis. This software is a great companion for creating marketing and sales plans.

Using its Refine Capture text tool, Mozenda can clean up the text you capture, pick out a specific piece of text, or split text into pieces.

80Legs [Commercial]

The first time I heard about 80Legs, I was confused about what the software actually does. Like Mozenda, 80Legs is a web-based data extraction tool with customizable features:

- Select which websites to crawl by entering URLs or uploading a seed list

- Specify what data to extract by using a pre-built extractor or creating your own

- Run a directed or general web crawler

- Select how many web pages you want to crawl

- Choose specific file types to analyze

80Legs offers customized web crawling, which lets you get very specific about your crawling parameters: these tell 80Legs which web pages to crawl and what data to collect from them. It also offers general web crawling, which can collect data such as web page content and outgoing links. Large web crawls take advantage of 80Legs’ ability to run massively parallel crawls.

80Legs also crawls data feeds and offers web extraction design services. No installation is needed.
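The crawl settings described above (a seed list, a page limit, and following outgoing links) map onto a classic breadth-first crawl. The sketch below is a generic illustration of that technique, not 80Legs' own code. To keep it runnable offline, a small dictionary called `LINK_GRAPH` stands in for the web; in a real crawler, `get_links` would download each page and extract its links.

```python
from collections import deque

# A tiny in-memory "web" standing in for real pages: URL -> outgoing links.
LINK_GRAPH = {
    "https://example.com/": ["https://example.com/a", "https://example.com/b"],
    "https://example.com/a": ["https://example.com/c", "https://example.com/"],
    "https://example.com/b": ["https://example.com/c"],
    "https://example.com/c": [],
}


def crawl(seed_urls, get_links, max_pages):
    """Breadth-first crawl from the seeds, visiting at most `max_pages` pages."""
    queue = deque(seed_urls)
    seen = set(seed_urls)      # URLs already queued, so nothing is visited twice
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        visited.append(url)
        for link in get_links(url):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return visited


pages = crawl(
    ["https://example.com/"],
    lambda url: LINK_GRAPH.get(url, []),
    max_pages=3,
)
print(pages)
```

A parallel crawler follows the same logic but hands the queued URLs to many workers at once.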

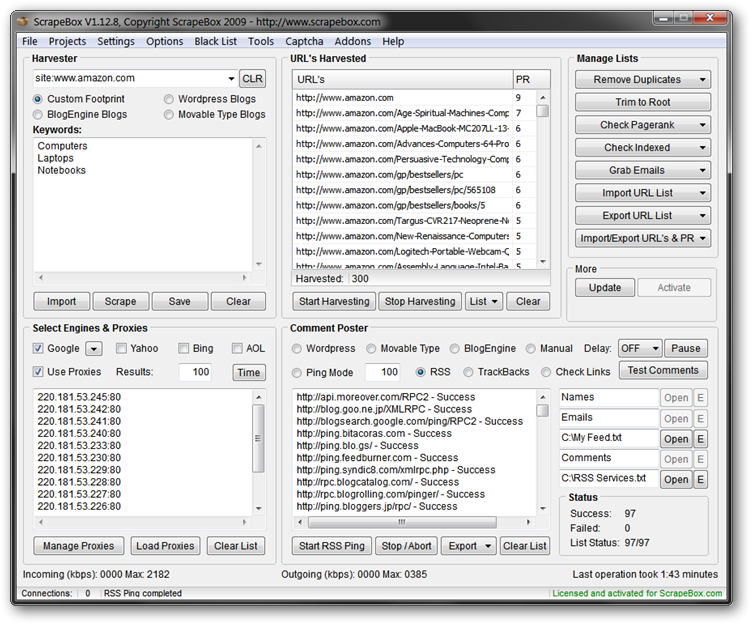

ScrapeBox [Commercial]

ScrapeBox is one of the most popular web scraping tools among SEO experts, online marketers and even spammers. With its very user-friendly interface, you can easily harvest data from a website:

- Grab Emails

- Check page rank

- Check high-value backlinks

- Export URLs

- Check index status

- Verify working proxies

- Powerful RSS Submission

Using thousands of rotating proxies, you can snoop on competitors’ site keywords, do research on .gov sites, harvest data, and post comments without getting blocked.

The latest updates allow the users to spin comments and anchor text to avoid getting detected by search engines.

You can also check out my guide to using ScrapeBox for finding guest posting opportunities.

Scrape.it [Commercial]

Using a simple point & click Chrome Extension tool, you can extract data from websites that render in javascript. You can automate filling out forms, extract data from popups, navigate and crawl links across multiple pages, extract images from even the most complex websites with very little learning curve. Schedule jobs to run at regular intervals.

When a website changes layout or your web scraper stops working, scrape.it will fix it automatically so that you can continue receiving data uninterrupted without the need for you to recreate or edit it yourself.

They also work with enterprises, using their own tool to deliver fully managed solutions for competitive pricing analysis, business intelligence, market research, lead generation, process automation and compliance & risk management requirements.

Features:

- Very easy web data extraction with a Windows Explorer-like interface

- Lets you extract text, images and files from modern Web 2.0 and HTML5 websites that use JavaScript & AJAX

- Users can select which features they want to pay for

- Lifetime upgrades and support at no extra charge on a premium license

Scrapy [Free Open Source]

Of course, the list would not be complete without Scrapy. It is a fast, high-level screen scraping and web crawling framework used to crawl websites and extract structured data from their pages. It can be used for many purposes, from data mining to monitoring and automated testing.

Features:

· Design with simplicity- Just write the rules to extract the data from web pages and let Scrapy crawl the entire website. It can crawl 500 retailers’ sites daily.

· Ability to attach new code for extensibility without having to touch the framework core

· Portable, open-source, 100% Python- Scrapy is completely written in Python and runs on Linux, Windows, Mac and BSD

· Scrapy comes with lots of functionality built in.

· Scrapy is extensively documented and has a comprehensive test suite with very good code coverage

· Good community and commercial support

Cons: The installation process is hard to get right, especially for beginners.

Needlebase [Commercial]

Many organizations, from private companies to government agencies, store their info in a searchable database that requires you to navigate a list page listing results and a detail page with more information about each result.

Grabbing all this information could result in thousands of clicks, but as long as it fits the same formula, Needlebase can do it for you. Point and click on example data from one page once to show Needlebase how your site is structured, and it will use that pattern to extract the information you’re looking for into a dataset. You can query the data through Needle’s site or output it as a CSV or file format of your choice. Needlebase can also rerun your scraper every day to continuously update your dataset.

OutwitHub [Free]

This Firefox extension is one of the more robust free products that exist. Write your own formula to help it find the information you’re looking for, or tell it to download all the PDFs listed on a given page. It will suggest certain pieces of information it can extract easily, but it’s flexible enough for you to be very specific in directing it.

The documentation for Outwit is especially well written, and they even have a number of tutorials for what you might be looking to do. So if you can’t easily figure out how to accomplish what you want, investing a little time to push it further can go a long way.

Best use: more text

How to Extract Links from a Web Page with OutWit Hub

In this mini tutorial we will learn how to extract links from a webpage with OutWit Hub.

Sometimes extracting all links from a given web page can be useful. OutWit Hub is the easiest way to achieve this goal.

1. Launch OutWit Hub

If you haven’t installed OutWit Hub yet, please refer to the Getting Started with OutWit Hub tutorial.

Begin by launching OutWit Hub from Firefox. Open Firefox then click on the OutWit Button in the toolbar.

If the icon is not visible go to the menu bar and select Tools -> OutWit -> OutWit Hub

OutWit Hub will open, displaying the web page currently loaded in Firefox.

2. Go to the Desired Web Page

In the address bar, type the URL of the Website.

Go to the Page view where you can see the Web page as it would appear in a traditional browser.

Now, select “Links” from the view list.

In the “Links” widget, OutWit Hub displays all the links from the current page.

If you want to export results to Excel, select all links using ctrl/cmd + A, then copy using ctrl/cmd + C and paste it in Excel (ctrl/cmd + V).
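If you would rather script the same job, the steps above come down to collecting the `href` of every link on the page. Here is a minimal sketch with the Python standard library. The sample markup, the class name `LinkCollector` and the base URL are made up for illustration, and relative links are resolved against the page address so they can be opened directly.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect the target of every <a href="..."> on a page as an absolute URL."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:   # skip anchors that have no href attribute
                self.links.append(urljoin(self.base_url, href))


def extract_links(html, base_url):
    """Return all links found in `html`, resolved against `base_url`."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    collector.close()
    return collector.links


# Illustrative sample markup, not taken from any real site.
SAMPLE = (
    '<p><a href="/about">About</a> '
    '<a href="https://other.example/x">X</a> '
    '<a name="top">no href</a></p>'
)
print(extract_links(SAMPLE, "https://example.com/blog/"))
```

Writing the resulting list to a CSV file gives you something Excel can open, much like the copy-and-paste step in the tutorial.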

irobotsoft [Free]

This is a free program that is essentially a GUI for web scraping. There’s a steep learning curve to figure out how to work it, and the documentation references an old version of the software. It’s the latest in a long tradition of tools that lets a user click through the logic of web scraping.

Generally, these are a good way to wrap your head around the moving parts of a scrape, but the products have drawbacks of their own that make them little easier than doing the same thing with scripts.

Cons: The documentation seems outdated

Best use: Slightly complex scrapes involving multiple layers.

iMacros [Free]

Following the same ethos as Microsoft macros, iMacros automates repetitive tasks. Whether you choose the website, Firefox extension, or Internet Explorer add-on flavor of this tool, it can automate navigating through the structure of a website to get to the piece of info you care about.

Record your actions once, navigating to a specific page, and entering a search term or username where appropriate. Especially useful for navigating to a specific stock you care about, or campaign contribution data that’s mired deep in an agency website and lacks a unique Web address. Extract that key piece (pieces) of info into a usable form. Can also help convert Web tables into usable data, but OutwitHub is more suited to that purpose. Helpful video and text tutorials enable you to get up to speed quickly.

Best use: Eliminate repetition in navigating to a particular data point in a website that you’re checking up on often by recording a repeatable action that pulls the datapoint out of the clutter it’s naturally surrounded by.

InfoExtractor [Commercial]

This is a neat little web service that generates all sorts of information given a list of URLs. Currently, it only works for YouTube video pages, YouTube user profile pages, Wikipedia entries, HuffingtonPost posts, Blogcatalog blog posts and The Heritage Foundation blog (The Foundry). Given a url, the tool will return structured information including title, tags, view count, comments, etc.

Google Web Scraper [Free]

A browser-based web scraper that works like Firefox’s Outwit Hub, it is designed for plain text extraction from any online page and for export to spreadsheets via Google Docs. Google Web Scraper can be downloaded as an extension and installed in your Chrome browser in seconds. To use it: highlight a part of the webpage you’d like to scrape, right-click and choose “Scrape similar…”. Anything similar to what you highlighted will be rendered in a table ready for export, compatible with Google Docs™. The latest version still has some bugs with spreadsheets.

Cons: It doesn’t work for images, and sometimes it can’t perform well on huge volumes of text, but it’s easy and fast to use.

Tutorials:

Scraping Website Images Manually using Google Inspect Elements

The main purpose of Google Inspect Elements is debugging, like Firebug for Firefox. However, if you’re flexible, you can also use this tool to harvest images from a website. The goal is to get specific images such as web backgrounds, buttons, banners, header images, and product images, which is very useful for web designers.

Now, this is a very easy task. First, you will need to download and install the Google Chrome browser on your computer. After the installation, do the following:

1. Open the desired webpage in Google Chrome

2. Highlight any part of the website and right click > choose Google Inspect Elements

3. In the Google Inspect Elements, go to Resources tab

4. Under Resources tab, expand all folders. You will eventually see script folders and IMAGES folders

5. In the Images folders, just use arrow keys to find the images you need to have (see the screenshot above)

6. Next, right click the images and choose Open the Image in New Tab

7. Finally, right click the image > choose Save Image As… . (save to your local folder)

You’re done!

Webhose.io (freemium)

The Webhose free plan gives you 1000 free requests per month, which is pretty decent. Webhose lets you use their APIs to pull in data from many different sources, which is perfect if you are searching for mentions. The software is good if you are looking to scrape lots of different sites for specific terms, as opposed to scraping specific sites.

Apify

Apify is a startup that provides comprehensive web scraping services to businesses and individuals. The platform offers a range of tools and services designed to help users extract and analyze data from websites, allowing them to gain valuable insights into various industries and markets.

The site runs by creating ‘actors’ to perform the required task and integrate with other platforms. There are already over 1000 custom-built scrapers that you can use.

Pricing for Apify’s services varies depending on the plan chosen, with a free plan available for basic use and paid plans starting at $49 per month for more advanced features and capabilities. With its user-friendly interface and powerful web scraping capabilities, Apify is an excellent choice for businesses and individuals looking to extract valuable data from the web.

Expired Domain Name Web Scrapers

SerpDrive – This software scrapes expired domains for you and is totally hassle-free. You get one free search when you sign up; after that, it’s only $12 to scrape 50 high-authority expired domains, which you are free to register. For more domain scrapers and details, see my PBN toolkit.

Web Scraping FAQs

1. Is web scraping legal?

Web scraping occupies a legal grey area. It’s not illegal per se, but various factors determine its legality, such as the website’s terms of service, data privacy laws, the content being scraped, and the way the data is used. It’s crucial to consult legal counsel and adhere to all applicable laws and regulations, including copyright laws and the website’s terms and conditions, before scraping data.

2. Can you get banned for web scraping?

Yes, you can get banned for web scraping. Many websites have mechanisms in place to detect and prevent scraping. These include rate limiting, IP tracking, and captchas. If scraping activity is detected, especially if it’s causing strain on the server or violating terms of service, the website may block the offending IP address.
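One practical way to stay under a site's rate limits is to space your own requests out. The sketch below enforces a minimum delay between consecutive requests. The class name `Throttle` and the 0.2 second interval are illustrative assumptions, and an appropriate delay depends on the site you are scraping.

```python
import time


class Throttle:
    """Enforce a minimum delay between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock     # injectable so the class can be tested without waiting
        self._sleep = sleep
        self._last = None       # time of the previous request, if any

    def wait(self):
        """Block until at least `min_interval` seconds have passed since the last call."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


throttle = Throttle(min_interval=0.2)
start = time.monotonic()
for page in range(3):
    throttle.wait()   # a real scraper would fetch the next page here
elapsed = time.monotonic() - start
print(f"3 requests spaced over {elapsed:.2f}s")
```

Calling `wait()` before every request keeps the scraper from hammering the server, which lowers both the load you cause and the chance of being blocked.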

3. What are the risks of web scraping?

Risks associated with web scraping include legal repercussions, such as lawsuits for copyright infringement, trespass to chattels, or violation of terms of service. Technical risks involve getting IP addresses banned, encountering security measures like captchas, and negatively impacting the website’s server performance, which could lead to further legal action.

4. How hard is web scraping?

The difficulty of web scraping varies depending on the complexity of the website and the scope of data to be extracted. Basic scraping can be relatively simple with the right tools and scripts. However, websites with complex structures or anti-scraping measures require more advanced programming skills and knowledge of scraping technologies and techniques.

5. Does BBC allow web scraping?

The BBC generally does not allow web scraping of their content. Their terms of service prohibit the use of automated tools to access or gather data from their website. Violating these terms can result in legal action or restricted access to BBC content.

6. Does Google allow web scraping?

Google generally prohibits web scraping of their services as outlined in their terms of service. They have robust anti-scraping measures in place. However, Google does provide APIs like Google Custom Search JSON API, which can be used to retrieve search results programmatically and legally.

7. How do I hide my IP address when scraping a website?

Hiding your IP address when scraping can be done using proxy servers or VPNs. Proxies are the most common, as they can rotate IP addresses, making it appear as though requests come from different locations. This approach helps bypass anti-scraping measures that monitor and block IP addresses based on suspicious activity.
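As a rough illustration of sending requests through a proxy, Python's standard library lets you build an opener that routes traffic via a given proxy address. The address `proxy.example:8080` below is a placeholder, not a working proxy, so substitute one from your own provider. No request is actually sent in this sketch.

```python
import urllib.request


def opener_for_proxy(proxy_url):
    """Build a urllib opener that sends HTTP and HTTPS traffic through `proxy_url`."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


# Hypothetical proxy address, for illustration only.
opener = opener_for_proxy("http://proxy.example:8080")

# With a working proxy, opener.open("https://example.com/") would fetch the
# page via that proxy, so the target site sees the proxy's IP address.
print(type(opener).__name__)
```

To rotate addresses, build one opener per proxy and switch between them from request to request.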

8. Why do websites block web scraping?

Websites block web scraping to protect their data, reduce bandwidth usage, and prevent potential copyright infringement or abuse of their services. Scraping can also overload servers, affecting a website’s service and functionality. By blocking scraping activities, websites seek to maintain control over how their data is accessed and distributed.

Gareth James has been in internet marketing since 2001. Gareth currently runs a portfolio of websites and works with a handful of clients.